From Pixels to Perception: The AI-Powered Revolution in Android Camera Technology

The Unseen Revolution: How Your Android Phone’s Camera Became a Genius

Remember the early days of Android phones? Taking a photo was a gamble. You’d tap the screen, wait for the shuddering shutter animation, and hope the blurry, pixelated result was somewhat recognizable. Those cameras were simple digital eyes, capturing light in a crude, straightforward manner. Today, the camera on your flagship Android phone is less of an eye and more of a brain. It’s an intricate system of advanced hardware and sophisticated artificial intelligence working in concert to not just capture a scene, but to interpret, understand, and enhance it. This transformation from a simple sensor to a perceptual engine is one of the most significant advancements in consumer technology, driven by relentless innovation in the world of Android.

This article delves into the incredible journey of Android camera technology, moving beyond the tired “megapixel wars” to explore the true revolutions happening behind the lens. We’ll dissect the fusion of groundbreaking hardware with computational magic, explore how different brands are tackling the same challenges, and provide actionable insights for you to get the most out of the powerful imaging gadget in your pocket. This isn’t just a story about specs; it’s the story of how Android phones learned to see.

Section 1: The Hardware Renaissance – Forging a Better Eye

For years, the primary metric for a good smartphone camera was the megapixel count. This marketing-driven “megapixel war” led to a misunderstanding of what truly makes a great photo. While resolution matters, the industry has shifted its focus to more meaningful hardware advancements. The new battleground is defined by sensor size, lens ingenuity, and dedicated processing power.

The Sensor Size Supremacy: Why Bigger is Genuinely Better

The single most important piece of hardware in any camera is the image sensor. Think of it as a digital canvas that collects light. A larger canvas can collect more light, more accurately. For years, smartphone sensors were tiny to fit into slim chassis. Now, leading Android phones are pushing the boundaries with massive sensors, some reaching the coveted “1-inch type” size, previously reserved for premium standalone cameras. The Xiaomi 14 Ultra and Sony Xperia PRO-I are prime examples.

What does a larger sensor actually do for you?

- Superior Low-Light Performance: With a larger surface area, the sensor’s individual pixels (photodiodes) can be larger, allowing them to capture more photons (light particles) with less noise (digital grain). The result is cleaner, brighter, and more detailed photos in dimly lit environments like restaurants or city streets at night.

- Enhanced Dynamic Range: A larger sensor can capture a wider range of brightness levels in a single shot, from the darkest shadows to the brightest highlights. This means less “blown-out” skies and more detail recovered from shadowy areas, creating a more balanced and realistic image.

- Natural Bokeh: A larger sensor, combined with a wide aperture lens, produces a shallower depth of field. This creates a beautiful, natural-looking background blur (bokeh) that makes your subject pop, without relying solely on software-based Portrait Mode.
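
To put a rough number on the low-light benefit described above, here is a minimal sketch. It assumes approximate sensor dimensions (the 1/2.55-inch figures in particular are illustrative, not taken from any spec sheet), the same scene, exposure time, and f-number for both sensors, and a purely shot-noise-limited signal, where the signal-to-noise ratio grows with the square root of the light collected. The function names are hypothetical.

```python
import math

# Approximate active-area dimensions in millimetres (assumed, illustrative values).
SENSORS_MM = {
    "1/2.55-inch type": (5.6, 4.2),
    "1-inch type": (13.2, 8.8),
}


def sensor_area_mm2(width_mm, height_mm):
    """Light-collecting area of a rectangular sensor."""
    return width_mm * height_mm


def shot_noise_snr_gain(area_small, area_large):
    """SNR gain of the larger sensor when shot noise dominates.

    Collected light scales with area, and shot-noise SNR scales with
    the square root of the collected light.
    """
    return math.sqrt(area_large / area_small)


small = sensor_area_mm2(*SENSORS_MM["1/2.55-inch type"])
large = sensor_area_mm2(*SENSORS_MM["1-inch type"])
area_ratio = large / small
snr_gain = shot_noise_snr_gain(small, large)
print(f"area ratio: {area_ratio:.1f}x, SNR gain: {snr_gain:.1f}x")
# prints "area ratio: 4.9x, SNR gain: 2.2x"
```

Under these assumptions, roughly five times the area buys a little over twice the signal-to-noise ratio, which is why the gain shows up most clearly in dim scenes.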

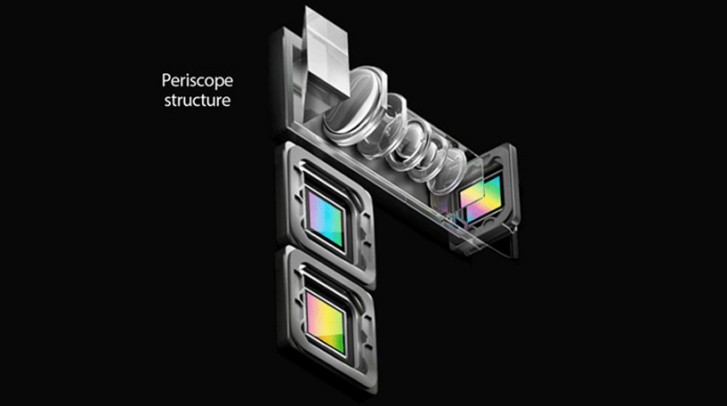

The Lens Revolution: Periscopes and Variable Apertures

The physical thinness of a smartphone is the eternal enemy of optical zoom. To overcome this, engineers developed the periscope lens. First popularized in Android phones by brands like Huawei and Samsung, this technology uses a prism to bend light 90 degrees, allowing a longer array of lenses to be laid flat inside the phone’s body. This is the magic behind the 10x optical zoom on several Samsung Galaxy S Ultra models (the S21 Ultra through the S23 Ultra, for example), enabling crisp shots of distant subjects that were once impossible on a phone.

Another exciting innovation is the return of the variable aperture. An aperture is the opening in the lens that controls how much light reaches the sensor, and it is described by an f-number: the smaller the number, the wider the opening. Most phones have a fixed aperture, but devices like the Xiaomi 14 Ultra feature a physical, stepless variable aperture (e.g., from f/1.63 to f/4.0). This gives the user creative control. A wide aperture (f/1.63) is great for low light and portrait shots with blurry backgrounds, while a narrow aperture (f/4.0) is better suited to landscape photography where you want everything from the foreground to the background to be in sharp focus.
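
The gap between those two f-numbers is easier to appreciate as arithmetic. The sketch below relies only on the standard relationship that the light admitted is proportional to 1/N^2 for f-number N, and that one photographic stop is a factor of two in light. The function names are hypothetical.

```python
import math


def light_ratio(f_wide, f_narrow):
    """How many times more light the wider aperture admits (light ~ 1/N^2)."""
    return (f_narrow / f_wide) ** 2


def stops_between(f_wide, f_narrow):
    """Difference in stops; one stop is a factor of two in light."""
    return math.log2(light_ratio(f_wide, f_narrow))


ratio = light_ratio(1.63, 4.0)
stops = stops_between(1.63, 4.0)
print(f"f/1.63 admits {ratio:.1f}x the light of f/4.0 ({stops:.1f} stops)")
# prints "f/1.63 admits 6.0x the light of f/4.0 (2.6 stops)"
```

In other words, stopping down from f/1.63 to f/4.0 costs about six times the light, which has to be recovered with a longer shutter speed or a higher ISO.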

Section 2: Computational Photography – The Ghost in the Machine

If hardware is the eye, computational photography is the brain. This is where Android phones, particularly Google’s Pixel line, truly rewrote the rules of photography. Instead of relying on a single “perfect” shot, these techniques involve capturing vast amounts of data in an instant and using powerful algorithms to construct an image far better than any single exposure could be.

The Magic of HDR+ and Night Sight

Google’s High Dynamic Range (HDR+) technology was a game-changer. When you press the shutter button on a Pixel phone, it doesn’t just take one photo. It rapidly captures a burst of short, underexposed frames. It then analyzes these frames, aligns them to correct for hand shake, and intelligently merges them. This process averages out noise, dramatically increases detail in shadows and highlights, and produces a final image with stunning dynamic range. It’s why a Pixel can capture a person’s face clearly even when they are standing in front of a bright sunset.
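
The noise-averaging part of that idea can be illustrated with a toy simulation. This is not Google's HDR+ pipeline: it assumes the frames are already perfectly aligned, models noise as simple Gaussian noise on a flat grey patch, and merges with a plain per-pixel mean. All names and parameter values are hypothetical.

```python
import random
import statistics


def capture_burst(true_value, noise_sigma, num_frames, num_pixels, rng):
    """Simulate a burst of noisy frames of a flat grey patch (already aligned)."""
    return [
        [true_value + rng.gauss(0.0, noise_sigma) for _ in range(num_pixels)]
        for _ in range(num_frames)
    ]


def merge_by_averaging(frames):
    """Average each pixel position across all frames in the burst."""
    num_frames = len(frames)
    return [sum(pixel_values) / num_frames for pixel_values in zip(*frames)]


rng = random.Random(42)  # fixed seed so the run is repeatable
frames = capture_burst(
    true_value=100.0, noise_sigma=8.0, num_frames=9, num_pixels=5000, rng=rng
)
merged = merge_by_averaging(frames)

single_noise = statistics.pstdev(frames[0])
merged_noise = statistics.pstdev(merged)
print(f"single frame noise: {single_noise:.2f}, merged noise: {merged_noise:.2f}")
```

With nine frames, the merged noise should come out at roughly a third of the single-frame noise, because averaging N independent frames reduces random noise by about the square root of N. A real pipeline also has to handle alignment and moving subjects, which this sketch ignores.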

Night Sight is the logical extension of this. In near-darkness, it captures an even longer burst of frames (up to 15 over several seconds) and uses sophisticated AI to merge them. This process is so powerful it can produce a bright, detailed, and colorful photo in conditions where the human eye can barely see, all without the harshness of a flash.

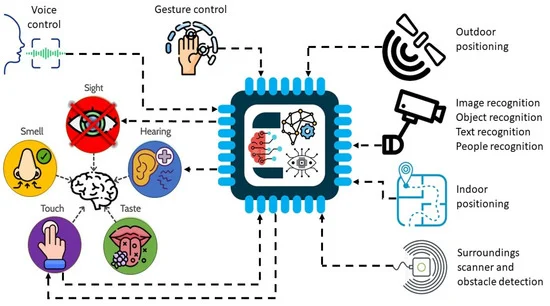

AI Scene Understanding: Semantic Segmentation

Modern Android gadgets have moved beyond simply optimizing the whole photo. They now use AI to understand *what’s in the photo*. This is called semantic segmentation. The phone’s processor identifies and isolates different elements—a face, the sky, a tree, a building, a plate of food. Once identified, it can apply targeted adjustments.

- Real-World Example: You take a photo of a friend at the beach. The phone recognizes your friend’s face, the blue sky, and the sandy beach. It might subtly smooth skin tones on the face, increase the saturation and contrast of the sky to make it a deeper blue, and enhance the texture of the sand—all independently within the same photo. This avoids the old problem of a “one-size-fits-all” filter that might make the sky look great but give your friend an unnatural skin tone. Google’s Magic Editor takes this a step further, allowing you to select and move these segmented objects after the shot is taken.
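
The per-region idea in the beach example can be sketched in a few lines. The label mask here is written by hand; on a phone it would come from a segmentation model. The class names, adjustment functions, and strength values are all hypothetical and chosen only to show different edits being applied to different regions of one image.

```python
# Hypothetical class labels a segmentation model might assign to each pixel.
SKY, FACE, SAND = "sky", "face", "sand"


def clamp(value):
    """Round to an integer and keep it inside the 8-bit range."""
    return max(0, min(255, round(value)))


def boost_saturation(pixel, amount):
    """Push each channel away from the pixel's grey level."""
    grey = sum(pixel) / 3
    return tuple(clamp(grey + (channel - grey) * amount) for channel in pixel)


def brighten(pixel, gain):
    """Scale every channel by the same gain."""
    return tuple(clamp(channel * gain) for channel in pixel)


# One adjustment per label; sand is left untouched in this sketch.
ADJUSTMENTS = {
    SKY: lambda p: boost_saturation(p, 1.3),
    FACE: lambda p: brighten(p, 1.1),
    SAND: lambda p: p,
}


def apply_per_region(image, mask):
    """Apply the adjustment that matches each pixel's label."""
    return [
        [ADJUSTMENTS[label](pixel) for pixel, label in zip(image_row, mask_row)]
        for image_row, mask_row in zip(image, mask)
    ]


image = [
    [(100, 150, 230), (200, 160, 140)],
    [(210, 190, 150), (210, 190, 150)],
]
mask = [
    [SKY, FACE],
    [SAND, SAND],
]
result = apply_per_region(image, mask)
print(result[0])  # prints "[(82, 147, 251), (220, 176, 154)]"
```

The sky pixel becomes a deeper blue while the face pixel is only brightened, so neither adjustment leaks into the other region.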

Section 3: The Great Fusion – Where Hardware and AI Collide

The current era of Android camera technology is defined by the fusion of cutting-edge hardware and intelligent software. Different manufacturers approach this synergy with unique philosophies, creating a fascinatingly diverse market for Android phones.

Case Study 1: The Google Pixel – Software Supremacy

For years, Google’s Pixel phones used what many considered to be dated camera hardware. Yet, they consistently ranked among the best camera phones. How? By being the undisputed master of computational photography. The Pixel’s strength lies in its consistency and reliability. You can point, shoot, and trust that Google’s processing, powered by its custom Tensor chip, will deliver a fantastic, well-balanced image nearly every time. The Pixel is the ultimate “fire and forget” camera, prioritizing a clean, true-to-life look over hyper-saturated or overly processed results.

Case Study 2: The Samsung Galaxy S Ultra – Hardware Overkill

Samsung takes the opposite approach: “more is more.” The Galaxy S Ultra line is a showcase of hardware prowess. It packs some of the highest megapixel counts (200MP), one of the most complex lens systems (two separate telephoto lenses), and one of the longest advertised zoom ranges (100x “Space Zoom”, which combines optical and digital zoom). This hardware provides incredible versatility. While the Pixel excels at the 1x to 5x range, the Galaxy S Ultra lets you capture details from a city block away. Samsung’s software then works to tame this powerful hardware, using AI for scene optimization, advanced pixel binning (combining multiple pixels into one “super pixel” for better light capture), and processing the massive 200MP files into manageable, detailed shots.
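
Pixel binning is simple to show on a small grid. The sketch below sums each block of neighbouring values into one “super pixel” and works out the resulting resolution. It treats the sensor as a plain grid of brightness values and ignores the colour filter layout that real sensors have to respect; the function names are hypothetical.

```python
def bin_pixels(raw, factor):
    """Sum each factor x factor block of a 2D grid into one output value."""
    height, width = len(raw), len(raw[0])
    if height % factor or width % factor:
        raise ValueError("grid dimensions must be multiples of the binning factor")
    return [
        [
            sum(raw[y + dy][x + dx] for dy in range(factor) for dx in range(factor))
            for x in range(0, width, factor)
        ]
        for y in range(0, height, factor)
    ]


def binned_megapixels(native_mp, factor):
    """Output resolution after factor x factor binning."""
    return native_mp / factor ** 2


raw = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
binned = bin_pixels(raw, 2)
print(binned)                     # prints "[[14, 22], [46, 54]]"
print(binned_megapixels(200, 4))  # prints "12.5"
print(binned_megapixels(200, 2))  # prints "50.0"
```

Each output value carries the combined light of its block, which is the trade being made: a 200MP sensor binned 4x4 produces a 12.5MP image with far more light per output pixel.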

Case Study 3: The Chinese Challengers (Xiaomi, Vivo) – Pushing the Envelope

Brands like Xiaomi, Vivo, and Oppo are carving out a middle ground, often being the first to introduce next-generation hardware. They are leading the charge with 1-inch sensors and forming strategic partnerships with legacy camera brands like Leica (Xiaomi) and Zeiss (Vivo). This combines the best of both worlds: incredible hardware potential from massive sensors and bespoke lenses, coupled with unique software tuning and color science from their camera partners. These phones often appeal to enthusiasts who enjoy the distinct “Leica look” or want the absolute best hardware foundation to build upon.

Section 4: A Practical Guide for the Modern Android Photographer

Having a powerful camera is one thing; knowing how to use it is another. Here are some tips, best practices, and recommendations to help you master your Android phone’s camera.

Best Practices and Actionable Tips

- Master Pro/Expert Mode: Don’t be intimidated by “Pro” mode. Focus on two key settings:

  - ISO: This is the sensor’s sensitivity to light. Keep it as low as possible (e.g., 50-100) in good light for the cleanest images. Only raise it in dark situations.

  - Shutter Speed: This is how long the sensor is exposed to light. Use a fast speed (e.g., 1/1000s) to freeze motion (kids, sports) and a slow speed (e.g., 1/15s or slower, which requires a steady hand or tripod) to create motion blur (light trails, waterfalls).

- Shoot in RAW: If you enjoy editing, shoot in RAW (or Expert RAW on Samsung). A RAW file is like a digital negative; it captures all the unprocessed data from the sensor, giving you far more flexibility to adjust exposure, colors, and white balance in editing apps like Adobe Lightroom Mobile.

- Beware the Pitfalls of AI: AI is great, but it can be overzealous. Sometimes, “Scene Optimizer” can make colors look cartoonishly vibrant, or “Face Retouching” can make skin look unnaturally smooth. Learn where these settings are on your phone and don’t be afraid to turn them off for a more natural, authentic photo.

- Clean Your Lens: This is the simplest yet most overlooked tip. Your phone’s lens is constantly being smudged by fingerprints and picking up lint from your pocket or bag. A quick wipe with a microfiber cloth can be the difference between a hazy, soft photo and a sharp, clear one.
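
The ISO and shutter-speed settings from the Pro-mode tip above trade off against each other, and the relationship is simple enough to compute. This sketch assumes a fixed aperture and the usual rule that image brightness is proportional to ISO multiplied by exposure time. The function name is hypothetical.

```python
def equivalent_iso(base_iso, base_shutter_s, new_shutter_s):
    """ISO needed to keep the same brightness at a new shutter speed.

    Assumes the aperture stays the same, so brightness depends only on
    ISO multiplied by exposure time.
    """
    return base_iso * (base_shutter_s / new_shutter_s)


iso_for_action = equivalent_iso(base_iso=50, base_shutter_s=1 / 15, new_shutter_s=1 / 1000)
print(f"ISO 50 at 1/15s is about ISO {iso_for_action:.0f} at 1/1000s")
# prints "ISO 50 at 1/15s is about ISO 3333 at 1/1000s"
```

Freezing motion in the same light therefore means accepting a much higher ISO and the extra noise that comes with it, which is why fast shutter speeds work best in bright conditions.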

Recommendations: Choosing the Right Phone for You

The “best” camera phone is subjective and depends on your needs.

- For the Point-and-Shoot Purist: The Google Pixel series is your best bet. Its reliability and true-to-life image processing are unmatched for capturing great photos with zero fuss.

- For the Versatility Seeker: The Samsung Galaxy S Ultra series is the king of versatility. If you value having an incredible zoom lens for concerts, sports, or wildlife, few other phones come close.

- For the Hardware Enthusiast: Look towards flagship offerings from Xiaomi or Vivo. If you are excited by the prospect of a 1-inch sensor, a variable aperture, and unique color profiles from Leica or Zeiss, these Android gadgets offer a more hands-on, enthusiast-grade experience.

Conclusion: The Intelligent Image

The evolution of the Android camera is a testament to the power of combining raw physical innovation with intelligent software. We’ve moved far beyond the simple race for more megapixels. Today’s battle is fought with photons on large sensors, with algorithms in custom silicon, and with light bent through ingenious periscope lenses. The result is a device in your pocket that doesn’t just take a picture of what’s in front of it; it understands it. It knows the difference between a sky and a face, between dusk and dawn, between a static landscape and a fleeting moment. As AI continues to evolve, the future promises even more intelligent, intuitive, and powerful imaging tools. For now, the best camera is truly the one you have with you, and thanks to these incredible advancements in the world of Android phones, that camera is more of a creative partner than ever before.