Beyond Megapixels: The AI-Powered Revolution in Android Camera Technology

Remember the early days of Android phones? Taking a photo was a gamble. The results were often a grainy, blurry memory of a moment, a far cry from the crisp images of even a basic point-and-shoot camera. Fast forward to today, and the conversation has flipped. The latest flagship Android phones from Google, Samsung, and Xiaomi aren’t just competing with dedicated cameras; in many everyday scenarios, they’re surpassing them. This leap isn’t just about cramming more megapixels onto a tiny sensor. It’s the result of two advances arriving together: better hardware and computational photography, much of it powered by artificial intelligence. This is the new frontier of mobile imaging, a battleground that dominates the latest Android news and defines the appeal of premium Android phones. In this article, we’ll peel back the layers of marketing jargon to explore the technologies that have transformed the camera in your pocket into an intelligent imaging powerhouse.

The Hardware Renaissance: Building a Better Digital Eye

For years, the smartphone camera race was deceptively simple: more megapixels meant a better camera. While that marketing-friendly metric still grabs headlines, the true hardware revolution is happening in areas that are far more impactful. The physical components of today’s Android cameras are more sophisticated than ever, laying the essential groundwork for software magic to happen.

The Megapixel Myth vs. Sensor Size Reality

The most significant hardware advancement is arguably the widespread adoption of larger image sensors. Think of a camera sensor as a bucket in the rain; a larger bucket catches more raindrops (photons of light). A larger sensor, like the 1-inch type sensor found in devices like the Xiaomi 14 Ultra, can capture significantly more light than a smaller one. This translates directly into tangible benefits: better performance in low light, less digital noise (grain), a wider dynamic range (more detail in both shadows and highlights), and a more natural-looking background blur (bokeh) without needing to rely solely on portrait mode software. This is why a 50MP photo from a phone with a large sensor often looks better than a 200MP photo from a phone with a smaller sensor. High-megapixel sensors narrow the gap with a technique called “pixel binning.” A 200MP sensor on a Samsung Galaxy S24 Ultra, for example, can combine 16 neighboring pixels into one “super pixel,” effectively behaving like a sensor with fewer but much larger pixels to produce a brighter, cleaner image of roughly 12MP (200 ÷ 16 = 12.5).
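The binning arithmetic can be sketched in a few lines of Python. This is a simplified illustration, not how any particular sensor does it: it averages a grayscale grid in software, whereas real sensors combine pixels on the chip and per color channel. The function names and the sample tile are made up for the example.

```python
def bin_pixels(image, factor):
    """Average each factor x factor block of a 2D grid into one output pixel."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by the binning factor")
    binned = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            block = [image[y][x]
                     for y in range(top, top + factor)
                     for x in range(left, left + factor)]
            row.append(sum(block) / len(block))
        binned.append(row)
    return binned


def binned_megapixels(sensor_megapixels, pixels_per_group):
    """Output resolution when pixels_per_group photosites merge into one."""
    return sensor_megapixels / pixels_per_group


# 16-to-1 binning is a 4 x 4 block, so a 200MP readout becomes 12.5MP.
print(binned_megapixels(200, 16))

# A tiny, made-up 4 x 4 "sensor" tile collapses to a single super pixel.
tile = [[10, 12, 11, 9],
        [10, 10, 12, 10],
        [9, 11, 10, 10],
        [11, 9, 10, 10]]
print(bin_pixels(tile, 4))
```

Averaging the block smooths out the pixel-to-pixel variation in the tile, which is the same reason a binned photo looks cleaner in dim light.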

Reaching Further: The Periscope and Variable Aperture Revolution

The laws of physics present a major challenge for smartphones: how do you achieve powerful optical zoom in a device less than a centimeter thick? The answer is the periscope lens. This piece of engineering, seen in flagships like the Google Pixel 8 Pro and Samsung Galaxy S24 Ultra, uses a prism to bend light 90 degrees, allowing the lens elements to be laid out horizontally inside the phone’s body. This enables true optical magnification (5x on both of those phones, and 10x on some earlier Galaxy S Ultra models) without the image degradation that comes from digital zoom. It means you can capture a sharp, detailed photo of a distant subject without physically moving closer. Another pro-level feature making its way into Android gadgets is the variable aperture. Devices like the Xiaomi 14 Ultra feature a mechanical aperture that can physically change size (e.g., from f/1.6 to f/4.0). A wider aperture (smaller f-number) lets in more light and creates a shallow depth of field, while a narrower aperture lets in less light and keeps more of the scene in sharp focus, giving photographers creative control previously reserved for DSLRs.
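To get a feel for how much difference that aperture range makes, here is a rough calculation in Python. It relies on the standard relationship that light gathered scales with 1/N² for f-number N, and assumes the focal length and shutter speed stay the same; the function names are invented for this sketch.

```python
import math


def relative_light(f_wide, f_narrow):
    """How many times more light the wider aperture admits than the narrower one.

    Light gathered scales with aperture area, which is proportional to 1 / N^2
    for f-number N (same focal length and shutter speed assumed).
    """
    return (f_narrow / f_wide) ** 2


def stops_between(f_wide, f_narrow):
    """Difference in exposure stops; one stop is a doubling of light."""
    return math.log2(relative_light(f_wide, f_narrow))


ratio = relative_light(1.6, 4.0)
print(f"f/1.6 admits {ratio:.2f}x the light of f/4.0")
print(f"That is about {stops_between(1.6, 4.0):.2f} stops")
```

In other words, opening up from f/4.0 to f/1.6 gives the sensor a little over six times as much light to work with, which is the difference between a clean night shot and a noisy one.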

The Computational Core: Where Software Becomes Magic

If advanced hardware is the eye, then computational photography is the brain. This is where Android phones truly differentiate themselves from traditional cameras. Instead of capturing a single instance in time, a modern smartphone camera captures a torrent of data before, during, and after you press the shutter button, using sophisticated algorithms and AI to assemble the perfect final image.

HDR+ and Beyond: The Art of Image Stacking

The technique at the heart of it all is image stacking, popularized on smartphones by Google with its HDR+ technology. When you take a photo on a Pixel phone, it doesn’t just take one picture. It rapidly captures a burst of short-exposure frames. The phone’s processor then aligns these frames to compensate for handshake, averages them to reduce noise, and merges them into a single image with a wide dynamic range. This is why a Pixel can capture a backlit person against a bright sunset and still show detail in their face and the colors in the sky, a feat that would challenge many dedicated cameras shooting a single exposure. This same principle is the foundation for low-light modes like Google’s Night Sight and Samsung’s Nightography, which stack even more frames over a longer period to “see in the dark.”
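The noise-reduction half of that pipeline is easy to demonstrate. The Python sketch below is a toy simulation, not Google’s HDR+ algorithm: it assumes the frames are already perfectly aligned, models sensor noise as simple Gaussian noise, and uses a plain average for the merge. All names and numbers are illustrative.

```python
import random
import statistics


def merge_frames(frames):
    """Average aligned frames pixel by pixel (each frame is a flat list)."""
    count = len(frames)
    return [sum(values) / count for values in zip(*frames)]


def noisy_frame(scene, noise_sigma, rng):
    """Simulate one short exposure: the true scene plus Gaussian sensor noise."""
    return [value + rng.gauss(0, noise_sigma) for value in scene]


rng = random.Random(42)          # fixed seed so the run is repeatable
scene = [100.0] * 2000           # a flat gray patch, 2000 pixels
frames = [noisy_frame(scene, 10.0, rng) for _ in range(9)]

single_noise = statistics.pstdev(frames[0])
merged_noise = statistics.pstdev(merge_frames(frames))
print(f"noise in one frame: {single_noise:.2f}")
print(f"noise after merging 9 frames: {merged_noise:.2f}")
```

Averaging N frames of independent noise cuts the noise by roughly the square root of N, so nine frames should land near one third of the single-frame figure. That is why night modes keep collecting frames for a second or more.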

AI-Powered Scene Recognition and Semantic Segmentation

This is where the “intelligence” in AI photography shines. Modern Android phones don’t just see a collection of pixels; their processors identify the individual components of a scene. This is called semantic segmentation. The AI recognizes the sky, trees, a person’s face, their hair, their clothes, and the food on their plate. It then applies tailored, localized adjustments to each element. It might subtly increase the saturation of the trees, make the sky a deeper blue, smooth the skin on the person’s face while sharpening their eyes, and boost the texture of the food, all within a single press of the shutter. This context-aware processing is what gives modern smartphone photos their characteristic “pop” and polish, making them instantly shareable.
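Once every pixel carries a label, the “tailored adjustment” step is conceptually simple. The sketch below assumes the segmentation has already been done and uses a single brightness gain per class as a stand-in for the richer saturation, sharpening, and smoothing adjustments a real pipeline would apply. The labels and gain values are hypothetical.

```python
# Hypothetical per-class brightness gains; real pipelines tune many more parameters.
GAINS = {"sky": 0.9, "tree": 1.1, "face": 1.2}


def adjust_by_label(pixels, labels, gains):
    """Scale each pixel by the gain for its label, clamping to the 0-255 range."""
    adjusted = []
    for value, label in zip(pixels, labels):
        gain = gains.get(label, 1.0)   # unlabeled regions pass through unchanged
        adjusted.append(min(255, max(0, round(value * gain))))
    return adjusted


pixels = [200, 200, 100, 100, 150, 250]
labels = ["sky", "sky", "tree", "tree", "face", "plate"]
print(adjust_by_label(pixels, labels, GAINS))
```

The key point is that the same input value can come out differently depending on what the phone thinks it is looking at, which a single global filter cannot do.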

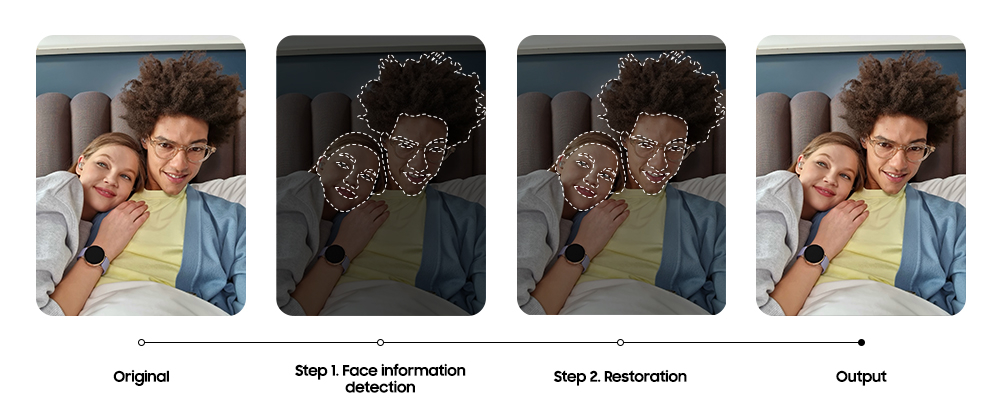

The Generative AI Era: Magic Eraser and Best Take

The latest wave of innovation, frequently highlighted in Android news, is the integration of generative AI. Google’s Magic Eraser is a prime example. It goes beyond a simple clone stamp tool; when you circle an unwanted object or person in a photo, the AI analyzes the surrounding environment and generates new pixels to fill in the space realistically. Similarly, the “Best Take” feature addresses the age-old problem of group photos. It works from a series of similar shots taken moments apart and lets you swap in individual faces from different frames, so everyone has their eyes open and is smiling in the final composite image. These are powerful editing tools that are becoming part of the default camera experience.
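The selection logic behind a feature like Best Take can be pictured as “for each person, pick the frame where they look best.” Google has not published its implementation, so the Python below is only a sketch of that idea: the expression scores are hypothetical numbers that a face-analysis model would supply, and the hard part (blending the chosen faces into one image) is not shown.

```python
def best_take(frames):
    """Pick, for each person, the frame index where their expression scores highest.

    frames: list of dicts mapping person name -> expression score (0.0 to 1.0).
    Returns a dict mapping person name -> index of the chosen frame.
    """
    choices = {}
    for person in frames[0]:
        scores = [frame[person] for frame in frames]
        choices[person] = scores.index(max(scores))
    return choices


# Hypothetical scores for three people across a series of three shots.
burst = [
    {"Ana": 0.9, "Ben": 0.2, "Chi": 0.6},
    {"Ana": 0.4, "Ben": 0.8, "Chi": 0.7},
    {"Ana": 0.5, "Ben": 0.6, "Chi": 0.95},
]
print(best_take(burst))
```

Here each person’s best expression comes from a different shot, which is exactly the situation where a single “best photo” does not exist and a composite helps.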

The Symbiotic Relationship: When Hardware and Software Dance

The ultimate camera experience isn’t about having the best hardware or the smartest software; it’s about how perfectly the two are integrated. Different manufacturers take different philosophical approaches to achieving this synergy, resulting in unique photographic systems with distinct strengths.

Case Study 1: The Google Pixel – The Software King

For years, Google’s Pixel line was the quintessential example of software triumphing over hardware. The phones used relatively modest camera sensors but produced class-leading results thanks to Google’s mastery of computational photography. Its custom Tensor chipset is designed to accelerate the company’s AI and machine learning models, making features like HDR+ and Night Sight fast and effective. With recent models like the Pixel 8 Pro, Google has paired its software prowess with top-tier hardware, including a large main sensor and a 5x periscope telephoto lens. This combination makes the Pixel a reliable point-and-shoot camera that consistently produces true-to-life images in almost any condition.

Case Study 2: The Samsung Galaxy S Ultra – The Hardware Juggernaut

Samsung’s approach is one of versatility, driven by a “more is more” hardware philosophy. The Galaxy S Ultra series is known for pushing the boundaries with 200MP sensors and complex multi-lens zoom systems, including multiple telephoto lenses to cover a vast focal range. Samsung’s software and AI are tuned to make use of this hardware. The result is a camera system that offers a great deal of flexibility. You can capture a sweeping ultrawide landscape, a standard high-resolution shot, a crisp optical zoom photo of a distant bird (5x or 10x, depending on the generation), and even a usable 30x or 100x “Space Zoom” image of the moon, all from the same device. The newer Galaxy AI features build on this, offering smart editing suggestions and generative fill capabilities.

Putting It All to Use: Tips for the Everyday Photographer

Understanding the technology in your Android phone is the first step. The next is knowing how to leverage it to capture better photos. These practical tips can help you make the most of your device’s advanced camera system.

Best Practices for Getting the Best Shot

- Trust the AI, But Know When to Override: For most scenarios, the default point-and-shoot mode is your best friend. The phone’s AI is designed to analyze the scene and produce a great-looking image automatically. Let it do the heavy lifting, and step in only when it misreads your intent, for example when it brightens a scene you wanted to keep dark and moody.

- Master Pro/Expert Mode: For creative shots like light trails from cars at night or intentionally blurring a waterfall, switch to Pro mode. Learning the basics of ISO (sensitivity to light), Shutter Speed (how long the sensor is exposed), and White Balance can unlock a new level of creative control.

- Shoot in RAW: If you enjoy editing your photos, use the RAW or Expert RAW mode. A RAW file captures all the unprocessed data from the sensor, giving you far more flexibility to adjust exposure, colors, and details in editing software like Adobe Lightroom without losing quality.

Common Pitfalls to Avoid

- The Digital Zoom Trap: Be mindful of your phone’s optical zoom limit (e.g., 3x, 5x). Zooming beyond this point is digital zoom, which is essentially cropping and enlarging the image, so real detail drops the further you go. Use your feet to get closer whenever possible.

- Ignoring Your Lenses: Don’t just pinch-to-zoom from the 1x view. Your phone has physically separate ultrawide, wide, and telephoto lenses. Tap the on-screen buttons (e.g., 0.6x, 1x, 5x) to switch to the correct lens for the job to ensure you’re getting the best possible optical quality for your desired framing.

- Forgetting to Clean the Lens: This is the simplest yet most overlooked tip. A fingerprint or smudge on your camera lens is one of the most common causes of hazy, soft, or flared photos. A quick wipe with a microfiber cloth can make a world of difference.
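To put a number on the digital zoom trap from the list above, the short Python sketch below estimates how many real sensor pixels are left once you zoom past the optical limit. The 48MP, 5x camera is a hypothetical example, and the calculation ignores the multi-frame upscaling that phones apply to hide the loss.

```python
def effective_megapixels(sensor_megapixels, optical_zoom, requested_zoom):
    """Megapixels left after cropping from the optical limit to the requested zoom.

    Cropping by a linear factor k keeps 1 / k^2 of the pixels.
    """
    if requested_zoom <= optical_zoom:
        return float(sensor_megapixels)   # no cropping needed
    crop_factor = requested_zoom / optical_zoom
    return sensor_megapixels / crop_factor ** 2


# A hypothetical 48MP telephoto camera with a 5x optical lens.
for zoom in (5, 10, 20):
    print(f"{zoom}x -> {effective_megapixels(48, 5, zoom):.1f}MP of real detail")
```

Doubling the zoom past the optical limit leaves only a quarter of the pixels, which is why image quality falls off so quickly at the long end of the zoom slider.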

Conclusion: The Intelligent Image

The evolution of the Android camera is a remarkable story of technological convergence. We’ve moved far beyond the simplistic megapixel wars into a new era defined by the interplay of light-gathering physics and predictive, creative software. The best camera system is no longer just a piece of hardware; it’s an ecosystem where large sensors and periscope lenses provide a rich canvas of data, and sophisticated AI algorithms act as the artist, painting a final picture that is often more polished than what the human eye perceived. As we look to the future of Android phones, we can expect this trend to accelerate, with more powerful AI, real-time video processing that rivals still photography, and new hardware innovations. The camera in your pocket is not just a tool for capturing memories; it’s one of the most advanced and accessible imaging gadgets ever created, and its potential is only beginning to be realized.