The Gemini Revolution: How Google’s New AI is Redefining Android Phones and the Mobile Experience

The Next Leap in Mobile Intelligence: Google’s Gemini Arrives on Smartphones

For years, the digital assistants on our smartphones have operated on a simple premise: you ask, they answer. From setting timers to checking the weather, Google Assistant, Siri, and Bixby have become competent, if somewhat rigid, digital butlers. Artificial intelligence, however, is shifting from pre-programmed commands to generative, conversational understanding, and that shift is now arriving in our pockets. The latest development in Android news is the widespread rollout of Google’s Gemini, a suite of AI models designed to replace and expand upon the traditional Google Assistant. This isn’t a rebranding or a minor update; it changes how we interact with our Android phones and the broader digital world. By integrating its most advanced AI directly into the mobile operating system, Google is setting a new benchmark for what a smart device can be, transforming it from a passive tool into a proactive, creative, and personalized partner. The move not only challenges Apple’s Siri on its home turf but also signals the beginning of a new era for the entire ecosystem of Android gadgets.

Section 1: Understanding the Gemini Rollout and Its Core Features

The transition from Google Assistant to Gemini is a multifaceted process that varies by platform and user choice. It’s crucial to understand the mechanics of this rollout to appreciate its full impact. Unlike a simple app update, this involves a deep integration into the operating system, fundamentally altering the user’s primary method of interacting with their device through voice and text.

The Two Paths of Integration: Android vs. iOS

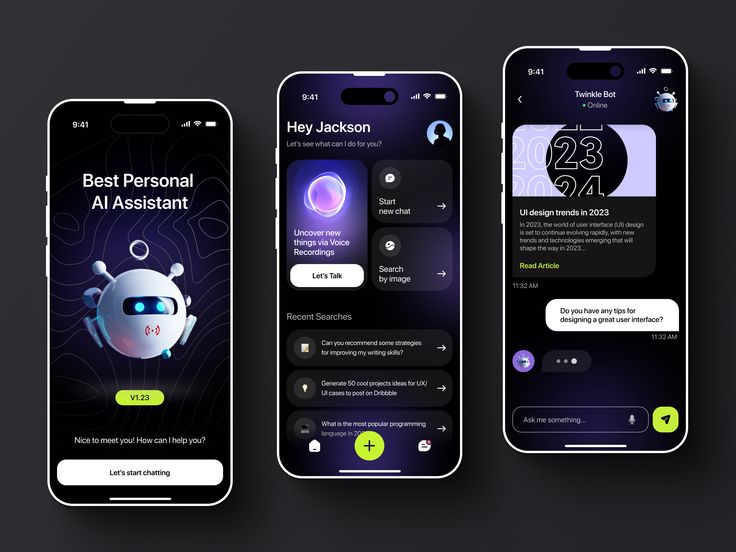

Google has adopted a bifurcated strategy for bringing Gemini to the two dominant mobile platforms. For users of modern Android phones, Gemini is available as a dedicated, standalone application from the Google Play Store. Upon installation, it offers to replace Google Assistant as the default digital assistant on the device. When a user opts in, long-pressing the power button or using the “Hey Google” hotword will now invoke the Gemini interface instead of the classic Assistant overlay. This native integration allows Gemini to have deeper access to system-level functions and contextual information from other apps, promising a more seamless and powerful experience.

On iOS, the approach is necessarily more constrained due to Apple’s platform restrictions. At launch, Gemini is not a standalone app there; it is integrated as a new tab within the existing Google app. iPhone users can toggle to the Gemini interface to access its conversational and generative AI features. While powerful, this implementation is less integrated than its Android counterpart: it cannot be set as the default system-wide assistant, and it cannot directly control device settings or interact with other apps in the same way. This highlights a key strategic advantage for the Android ecosystem in the burgeoning AI wars.

Gemini vs. Gemini Advanced: Understanding the Tiers

Not all Gemini experiences are created equal. Google offers its AI in two primary tiers, and the tier determines the capabilities available to the user:

- Gemini (Standard): The free version is powered by the Gemini Pro model. This is a highly capable large language model (LLM) that excels at tasks like summarizing articles, drafting emails, brainstorming ideas, and engaging in complex, multi-turn conversations. For the vast majority of users, Gemini Pro offers a significant leap in intelligence and utility over the old Google Assistant.

- Gemini Advanced: This premium tier requires a subscription to the Google One AI Premium plan. It gives users access to Gemini 1.0 Ultra, Google’s most capable model at launch. Gemini Ultra demonstrates superior performance in complex reasoning, following nuanced instructions, coding, and creative collaboration. It’s designed for power users, developers, and creative professionals who need the cutting edge of AI performance for their tasks.

Section 2: A Technical Breakdown of Gemini’s Mobile Capabilities

The true significance of Gemini lies not just in what it does, but how it does it. Its underlying architecture represents a generational leap over the natural language processing (NLP) models that powered previous assistants. This technical superiority is what enables a richer, more intuitive user experience on Android phones and other devices.

The Power of Multimodality

Perhaps the most significant technical advantage of Gemini is that it was built from the ground up to be natively multimodal. This means it can understand, process, and combine different types of information (text, images, audio, and code) within a single request. Previous assistants could handle these inputs separately (e.g., use Google Lens to identify an object, then perform a text search), but Gemini can reason across them in a single query.

Real-World Scenario: A user is at a hardware store and is unsure which screw to buy for a piece of furniture they are assembling.

- Old Assistant Workflow: Take a picture of the instruction manual’s diagram. Open Google Lens to identify the screw type. Open a new search to find where to buy it. This involves multiple steps and apps.

- Gemini Workflow: The user can simply open Gemini, take a picture of the instruction diagram and the bin of screws, and ask, “Which of these screws do I need for this diagram, and what is it called?” Gemini can analyze the visual information from both the diagram and the real-world screws, cross-reference the data, and provide a direct, actionable answer.

This ability to synthesize information from different modalities unlocks countless new use cases, from generating social media captions for a photo to helping a student solve a math problem by simply showing it a picture of their textbook.
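To make the hardware-store scenario concrete, here is a minimal Python sketch of how one question and two photos might be bundled into a single multimodal request. It only builds the payload and makes no network call. The function names are hypothetical, and the field names (`contents`, `parts`, `inline_data`) are loosely modeled on the shape used by Google’s public generative AI REST API; treat them as illustrative assumptions rather than a verified contract.

```python
import base64
import json


def image_part(image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Wrap raw image bytes as a base64-encoded inline part (assumed shape)."""
    return {
        "inline_data": {
            "mime_type": mime_type,
            "data": base64.b64encode(image_bytes).decode("ascii"),
        }
    }


def build_multimodal_request(question: str, images: list[bytes]) -> dict:
    """Combine one text question and any number of images into a single user turn."""
    parts = [{"text": question}]
    parts.extend(image_part(img) for img in images)
    return {"contents": [{"role": "user", "parts": parts}]}


if __name__ == "__main__":
    # Placeholder bytes stand in for real photos of the diagram and the screw bin.
    diagram = b"fake-diagram-bytes"
    screws = b"fake-screw-bin-bytes"
    request = build_multimodal_request(
        "Which of these screws do I need for this diagram, and what is it called?",
        [diagram, screws],
    )
    print(json.dumps(request, indent=2))
```

The point of the sketch is that the text and both images travel together in one turn, so the model can reason across them instead of the user chaining separate tools.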

Contextual Awareness and Conversational Memory

A major limitation of older assistants was their lack of conversational memory. Each query was often treated as a new, isolated event. Gemini, as a true LLM, excels at maintaining context throughout a conversation. Users can ask follow-up questions, make clarifications, and refer to previous parts of the dialogue without having to restate the entire query. This makes interactions feel less like a series of commands and more like a natural conversation. For example, you can ask, “Show me some good Italian restaurants nearby,” and follow up with, “Which of those are open past 10 PM and have outdoor seating?” without having to mention “Italian restaurants” again. This contextual retention is critical for complex tasks like planning a multi-day trip or outlining a detailed project.
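One common way chat systems keep context is to resend the whole dialogue with every new request. The Python sketch below illustrates that idea under stated assumptions: `Conversation` and `echo_model` are hypothetical names, and `echo_model` is a stand-in that merely reports what it can see. Nothing here is confirmed to be how Gemini itself stores conversation state.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Conversation:
    """Keeps every turn so each new request carries the full dialogue."""

    # Hypothetical model interface: receives the history, returns a reply.
    model: Callable[[list[dict]], str]
    history: list[dict] = field(default_factory=list)

    def ask(self, user_text: str) -> str:
        # Record the user's turn, call the model with everything so far,
        # then record the reply so later turns can refer back to it.
        self.history.append({"role": "user", "text": user_text})
        reply = self.model(self.history)
        self.history.append({"role": "model", "text": reply})
        return reply


def echo_model(history: list[dict]) -> str:
    """Stand-in for a real LLM: reports how many user turns it can see."""
    user_turns = [t["text"] for t in history if t["role"] == "user"]
    return f"I can see {len(user_turns)} user turn(s); the first was: {user_turns[0]!r}"


if __name__ == "__main__":
    chat = Conversation(model=echo_model)
    chat.ask("Show me some good Italian restaurants nearby")
    print(chat.ask("Which of those are open past 10 PM and have outdoor seating?"))
```

Because the second call still sees the first question, “those” in the follow-up has something to refer to; an assistant that handled each query in isolation would not.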

Section 3: Practical Implications and the User Experience Overhaul

The shift to Gemini is more than a technical upgrade; it’s a paradigm shift in user experience. It changes the role of an Android phone from a device that executes commands to one that collaborates on complex tasks. However, this transition is not without its challenges and learning curves.

Case Study: A Day in the Life with Gemini

To understand the practical impact, consider the workflow of a marketing professional:

- Morning (Brainstorming): Instead of staring at a blank page, they can ask Gemini, “Generate five catchy headlines for a blog post about the benefits of sustainable packaging for e-commerce businesses.” They can then refine the results by saying, “Make the third one more playful and add an emoji.”

- Afternoon (Content Creation): They take a picture of a new product and ask Gemini, “Write a short, engaging Instagram caption for this photo, highlighting its eco-friendly materials. Include three relevant hashtags.”

- Evening (Planning): While reviewing a long email thread about an upcoming project, they can use Gemini’s integration with Gmail (part of Gemini for Workspace, formerly Duet AI) to ask, “Summarize the key action items and deadlines from this email chain for me.”

This demonstrates how Gemini can be woven into a professional’s daily tasks, saving time, overcoming creative blocks, and improving productivity directly from their primary device.

The Transition Pains: What’s Missing from Google Assistant?

While Gemini is vastly more capable in many areas, the initial rollout is not a complete 1:1 replacement for all of Google Assistant’s features. As of its launch, some of the deeply integrated, device-specific commands and routines that users have come to rely on are not yet fully supported in Gemini. These include:

- Routines: Complex, multi-step routines (e.g., a “Good Morning” routine that turns on lights, reads the news, and adjusts the thermostat) may not function as seamlessly.

- Media Service Integration: Specific voice commands for controlling third-party music or podcast apps might be less reliable initially.

- Reminders and Notes: Integration with Google Keep and Tasks for setting reminders via voice is still a work in progress.

Google has stated that it is actively working to incorporate these beloved Assistant features into the Gemini experience. For now, users can often switch back to the classic Assistant within the Gemini app for specific tasks, but this creates a slightly disjointed experience. This matters most to power users who have built their smart home and productivity workflows around the old Assistant.

Section 4: Recommendations, Best Practices, and the Future Outlook

To make the most of this powerful new tool, users need to adapt their approach. The way one interacts with a generative AI is different from how one issues commands to a traditional assistant. Furthermore, the implications for privacy and the future of Android gadgets are profound.

Tips and Best Practices for Using Gemini

- Be Descriptive and Provide Context: The quality of Gemini’s output depends heavily on the quality of your input. Instead of asking, “Write an email,” try, “Write a professional but friendly email to my team (John, Sarah, David) summarizing our Q2 goals, which are to increase user engagement by 15% and launch the new feature by June 1st.”

- Iterate and Refine: Don’t expect the first response to be perfect. Use follow-up prompts to tweak the results. Ask it to “make it shorter,” “adopt a more formal tone,” or “explain this like I’m a beginner.”

- Leverage Multimodality: Get into the habit of using your camera as an input device. Snap a picture of a landmark and ask for its history. Take a photo of a whiteboard after a meeting and ask Gemini to transcribe the notes and create a list of action items.

- Manage Your Privacy: Be mindful of the data you share. You can review and delete your Gemini activity history in your Google Account settings. Avoid inputting sensitive personal, financial, or proprietary information into any AI chatbot.
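For readers who script their prompts, the “be descriptive” tip above can be sketched as a small Python helper that assembles a task, an audience, a tone, and supporting details into one prompt. The function name `build_prompt` and the line layout it produces are assumptions made for illustration; Gemini does not require any particular prompt format.

```python
from typing import Optional, Sequence


def build_prompt(
    task: str,
    audience: str = "",
    tone: str = "",
    details: Optional[Sequence[str]] = None,
) -> str:
    """Assemble a descriptive prompt from a task plus optional context."""
    lines = [task.strip()]
    if audience:
        lines.append(f"Audience: {audience}")
    if tone:
        lines.append(f"Tone: {tone}")
    # Each supporting detail becomes its own bullet so nothing gets buried.
    for detail in details or []:
        lines.append(f"- {detail}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(
        build_prompt(
            "Write an email summarizing our Q2 goals.",
            audience="my team (John, Sarah, David)",
            tone="professional but friendly",
            details=[
                "Increase user engagement by 15%",
                "Launch the new feature by June 1st",
            ],
        )
    )
```

Filling in the audience, tone, and details forces the context the first tip asks for; refinement then happens through follow-up prompts in the same conversation.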

The Future of AI on Android

The launch of the Gemini app is just the beginning. The long-term vision is to have AI more deeply and proactively integrated throughout the Android OS. This includes the potential for on-device models (like Gemini Nano) to power features without needing a cloud connection, enhancing speed and privacy. We can expect Gemini to become the intelligent layer that connects all Android gadgets, from your phone and watch to your car and smart home devices, creating a truly ambient and cohesive computing environment. This will likely spur developers to create a new generation of AI-first applications, further cementing the importance of powerful AI on mobile platforms.

Conclusion: A New Chapter for Mobile Interaction

The arrival of Gemini on Android and iOS is far more than a simple software update; it is a landmark event that redefines the very concept of a mobile digital assistant. By replacing the command-and-response structure of Google Assistant with a powerful, multimodal, and conversational AI, Google has transformed our smartphones into collaborative partners. While the transition has some initial friction, with certain legacy features still being integrated, the potential for enhanced productivity, creativity, and problem-solving is immense. For users of Android phones, this native integration offers a glimpse into a future where our devices don’t just respond to us, but understand, anticipate, and create with us. As Gemini continues to evolve and integrate more deeply across the ecosystem of Android gadgets, it is poised to become the central nervous system of our digital lives, marking the true beginning of the mobile AI era.